Scientists from the University of California, Berkeley, and the University of California, San Francisco, have developed a new method of creating speech from brain signals, which could improve communication for people with speech impairments.

Five patients were fitted with thin square pads, each lined with hundreds of electrodes, that recorded the brain activity controlling movement of the vocal tract during speech. Electrodes are electrical conductors through which current enters or leaves a region; here they pick up the brain’s electrical signals.

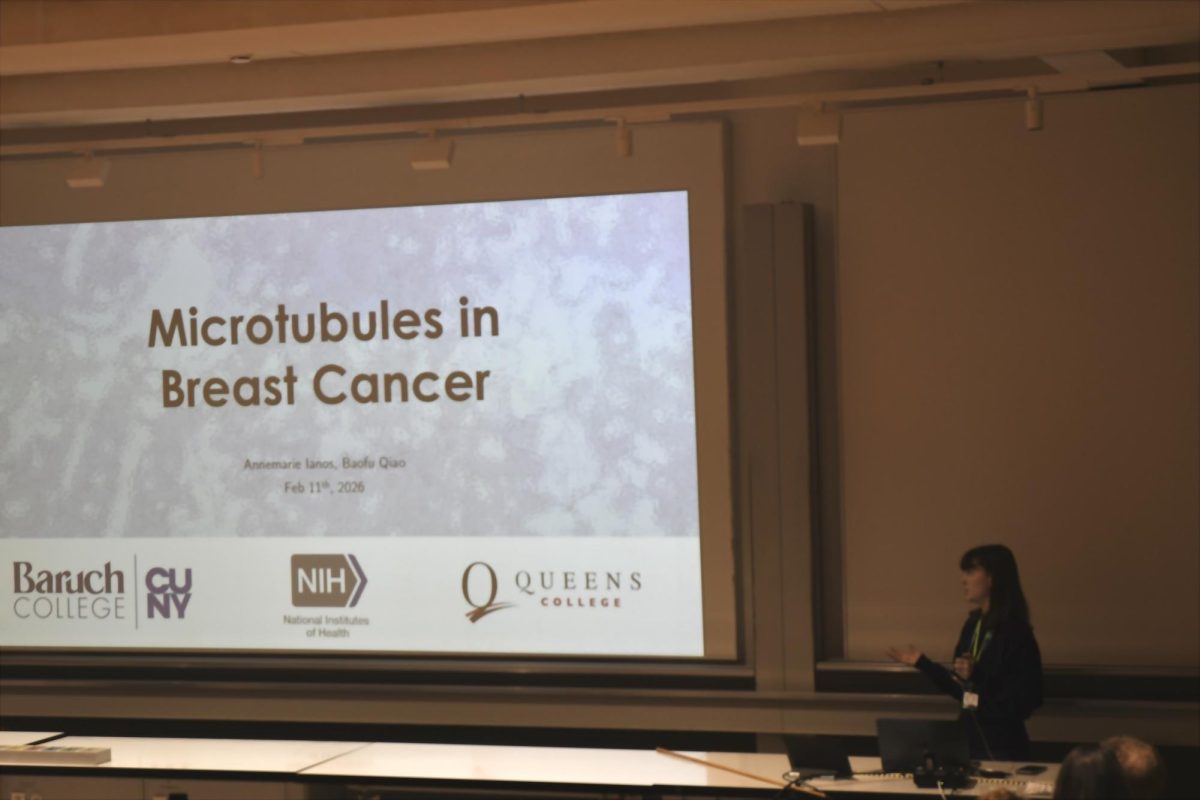

The patients were from the UCSF Epilepsy Center, which focuses on treating patients with seizures. All five were able to speak English fluently and without help. They were asked to read aloud hundreds of words while activity in the areas of the brain used for language construction was recorded.

After the patients were done, scientists reverse-engineered the patients’ vocal tract movements to create speech, linking the sounds in the recordings to the anatomy that produced them.

The movements of the tongue, jaw and lips were used to build a virtual voice that relied on the same movements the patients made in order to speak.

The virtual voice used two systems: one that connected the brain signals recorded as words were spoken to vocal movements, and a second that created speech from those same vocal movements.
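For readers who think in code, the two-step idea can be sketched as two functions chained together. This is only a toy illustration, not the researchers' software: the function names, the tiny hand-picked weight tables and the number of features are all made up for the example, and each stage here is a simple weighted sum rather than whatever model the team actually trained.

```python
from typing import Callable, List

Vector = List[float]


def linear_stage(weights: List[Vector]) -> Callable[[Vector], Vector]:
    """Build a stage that turns an input vector into weighted sums of its values."""
    def apply(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return apply


# Stage 1 (hypothetical weights): 3 recorded brain features -> 2 vocal movements,
# for example a jaw position and a lip position.
signals_to_movements = linear_stage([[0.5, 0.0, 0.5],
                                     [0.0, 1.0, 0.0]])

# Stage 2 (hypothetical weights): 2 vocal movements -> 2 sound features.
movements_to_sound = linear_stage([[2.0, 0.0],
                                   [1.0, 1.0]])


def decode(frames: List[Vector]) -> List[Vector]:
    """Run each time slice of brain features through both stages in order."""
    return [movements_to_sound(signals_to_movements(f)) for f in frames]


# Two made-up time slices of brain features.
frames = [[1.0, 2.0, 3.0], [0.0, 0.0, 0.0]]
sound_features = decode(frames)
print(sound_features)
```

The point of the split is that the middle step, the vocal movements, is something physical that can be checked on its own, instead of trying to jump from brain signals to sound in a single leap.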

Once the virtual voice was built, data showed that it constructed speech faster than other implant-based communication systems: 150 words a minute, compared with eight words a minute for the others.

This form of speech construction could help people with damaged vocal cords, or people who cannot speak but can still move their mouths.

The research, while focused heavily on linguistics, can help people understand what goes into creating speech. With virtual speech technology available, communication can be assisted using the movements alone.

Currently, many people with amyotrophic lateral sclerosis (ALS), also known as Lou Gehrig’s disease, or with other conditions that degrade muscle and motor function, have to communicate by substituting other movements for those of the vocal tract, because some cannot move the necessary parts.

Stephen Hawking is an example of the lengths people will go to in order to communicate. Hawking used a lightweight infrared sensor that picked up slight muscle movements in his cheek. A screen on his wheelchair displayed letters to choose from, and he would move his cheek muscle to click when the letter he wanted was highlighted. His hardware and interface were outdated for most of his life, but with patience he accomplished his work.

Because he could not use his lips, Hawking would not have been able to use the interface the research team at UC San Francisco and UC Berkeley created.

Every case is different: when researchers looked at the brain areas that patients used to construct language, they found differences in each one.

The process is much faster than the clicker-like system Hawking used, but it limits the number of people who can use it. Despite the limitations, the process is still a great success for people with speech impairments in the future.

The limitations are a push toward further development of systems that rely on brain waves alone, which could eventually mean users do not have to move a muscle.

Recordings of the virtual voice are clear enough to understand what is being said and contain minimal errors, which shows promise for the future.

Researchers are continuing to develop the new interface, adding voices that better reflect the person using it, and are moving it toward clinical trials for people without a voice.

As brain-machine interface technology improves, painful and time-consuming methods of speech may fall out of regular use. With the research completed at UC Berkeley and UC San Francisco put to use, speech impairments may soon become a thing of the past.