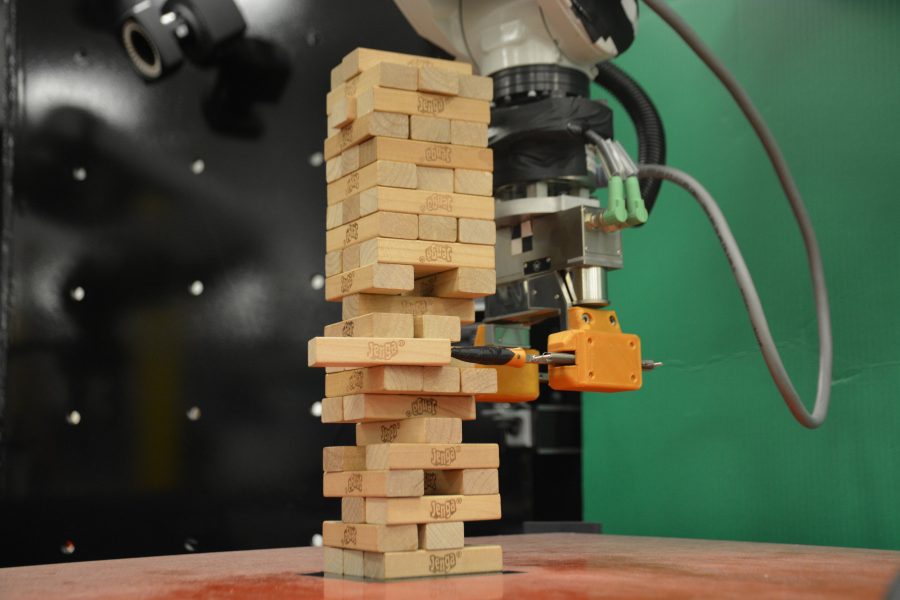

A robot developed by MIT engineers is learning to play Jenga, part of an effort to find out how the process of machine learning can be improved.

The robot consists of a simple arm fitted with three additional components that together let it sense touch and sight and adjust its movements to its environment. The first is a soft-pronged gripper, which the robot needs in order to handle Jenga blocks at all. The other two, a force-sensing cuff and an external camera, let the machine feel and see the tower's individual blocks. Using this feedback, the robot decides how to handle each block: it either pushes the block farther or removes it and places it on top of the tower, as in a traditional Jenga game, before moving on to the next block.

Details of the robot and its adventures playing Jenga are published in the journal Science Robotics. According to the study, the aim was to find an alternative to the machine-learning methods currently in use, which are extremely expensive and elaborate. Under those methods, the robot would have to learn by computing every possible interaction and outcome involving the tower's 54 individual blocks.

Instead, the researchers developed a simpler, more data-efficient way for the robot to interpret the world around it, one closer to how humans, and human babies in particular, learn.

Babies perform an action without any idea of what they are doing, then learn whether to repeat it based on whether it produced a beneficial response. The main difference between a baby and the machine is that the baby has many ways to sense its environment, ranging from its vestibular sense (the sense of balance and spatial orientation) to smell and taste.

The MIT robot, by contrast, had only tactile and visual stimulation available to it and learned from those alone.

The robot would interact with a block at random, take in the tactile and visual stimulation it received from the environment, and log whether the attempt was a success. Based on these records, it made more informed decisions the next time it interacted with a block on the tower.
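To make the idea of learning from logged successes and failures more concrete, here is a toy sketch in Python. It is not the model used in the MIT study; it only illustrates the general trial-and-error loop described above. Everything in it is a hypothetical assumption: the single "resistance" reading stands in for the robot's much richer touch and vision data, the 0.5 cutoff deciding whether a block slides out is invented, and the names `probe_block`, `attempt_extraction`, `TrialAndErrorLearner`, and `play` are made up for illustration. The learner first tries blocks at random, then only pulls a block if its reading falls below a line drawn halfway between the firmest push that succeeded and the lightest push that failed.

```python
from __future__ import annotations

import random

# Hypothetical simulator, invented for illustration: pushing a block yields a
# resistance reading in [0, 1), and the block slides out cleanly only when the
# reading is below 0.5. The real robot's sensing is far richer than this.
TRUE_CUTOFF = 0.5


def probe_block(rng: random.Random) -> float:
    """Simulated tactile reading felt when pushing a randomly chosen block."""
    return rng.random()


def attempt_extraction(resistance: float) -> bool:
    """Simulated outcome: True if the block comes out without toppling the tower."""
    return resistance < TRUE_CUTOFF


class TrialAndErrorLearner:
    """Logs (reading, succeeded) pairs and learns when a block is worth pulling."""

    def __init__(self) -> None:
        self.log: list[tuple[float, bool]] = []

    def record(self, resistance: float, succeeded: bool) -> None:
        self.log.append((resistance, succeeded))

    def threshold(self) -> float | None:
        """Halfway between the firmest success and the lightest failure seen so far."""
        ok = [r for r, s in self.log if s]
        bad = [r for r, s in self.log if not s]
        if not ok or not bad:
            return None  # too little experience to draw a line yet
        return (max(ok) + min(bad)) / 2

    def should_extract(self, resistance: float) -> bool:
        t = self.threshold()
        # With no learned line yet, keep exploring by attempting the block.
        return True if t is None else resistance < t


def play(trials: int = 200, explore: int = 30, seed: int = 0):
    """Random attempts first, then attempts guided by the logged experience."""
    rng = random.Random(seed)
    learner = TrialAndErrorLearner()
    skipped = 0
    for i in range(trials):
        reading = probe_block(rng)
        if i < explore or learner.should_extract(reading):
            # Try the block and log both what was felt and whether it worked.
            learner.record(reading, attempt_extraction(reading))
        else:
            skipped += 1  # leave a block that feels stuck, as a human player might
    return learner, skipped
```

Calling `learner, skipped = play()` returns the log of attempts and the number of blocks the learner chose to leave alone. A single threshold on one reading keeps the sketch readable; a real system combining force and camera data would need a far richer model of how the tower behaves.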

The learning approach described here advances the field by showing that machines can learn from physical interaction in much the same way that humans do.

Why did the robot play Jenga rather than a strategic game like chess? Alberto Rodriguez, Walter-Henry-Gale Career Development Professor in Mechanical Engineering at MIT, answered that question in the study.

“Unlike in more purely cognitive tasks or games such as chess or Go, playing the game of Jenga also requires mastery of physical skills such as probing, pushing, pulling, placing, and aligning pieces,” Rodriguez wrote.

“It requires interactive perception and manipulation, where you have to go and touch the tower to learn how and when to move blocks. This is very difficult to simulate, so the robot has to learn in the real world, by interacting with the real Jenga tower. The key challenge is to learn from a relatively small number of experiments by exploiting common sense about objects and physics.”

As for how the robot measured up against human players, Miquel Oller, one of the researchers, reported that the team “saw how many blocks a human was able to extract before the tower fell, and the difference was not that much.”

This type of robot will most likely be used in factories, on assembly lines. Combining tactile and visual learning would improve on current assembly-line robots, which have no room to learn new skills or get better at what they do. They simply repeat the same motion countless times until they break down, and even when something in their environment actually changes, they do not adjust their behavior.

This study, however, takes the first step toward machines that use sensors to learn about changes in their environment and adjust their actions accordingly.