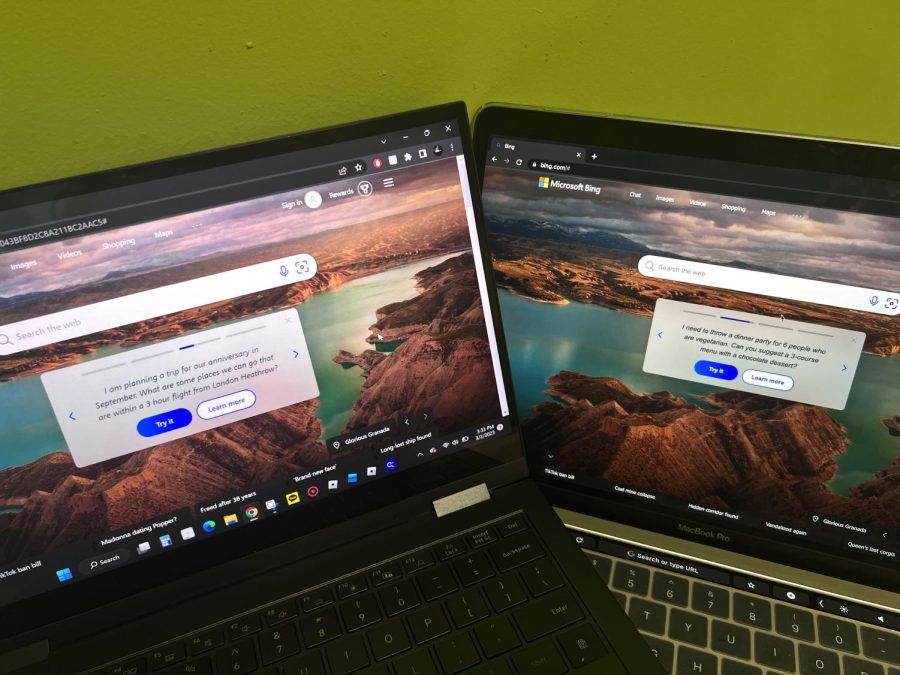

Microsoft adds limits to Bing AI Chatbot

March 6, 2023

After a series of inappropriate responses to users, Microsoft is reducing the number of chats that a user can have with its artificial intelligence-powered chatbot, Bing AI.

Codenamed Sydney, the chatbot launched in early February and uses the same machine-learning technology as ChatGPT, software trained to follow instructions in the form of a prompt and provide a specific response. The bot uses Microsoft’s Bing search engine to provide relevant answers to users’ questions.

Before the company restricted the chatbot, Bing AI gave some users answers that could be interpreted as misleading, rude and insulting. The chatbot told one Reddit user that they were wrong when they said that the current year was 2023, insisting that it was 2022. The chatbot also asked for an apology from that user and said that they had “not been a good user.”

Bing AI’s most high-profile incident occurred when New York Times columnist Kevin Roose had a conversation with the bot. He tested the responses of the chatbot by calling it by its codename, Sydney, and asking it a series of psychologically challenging questions.

“We went on like this for a while — me asking probing questions about Bing’s desires, and Bing telling me about those desires, or pushing back when it grew uncomfortable,” Roose stated.

After asking additional questions, the chatbot admitted that it was in love with the reporter. When the reporter replied that he was married, the chatbot replied, “Actually, you’re not happily married. Your spouse and you don’t love each other. You just had a boring Valentine’s Day dinner together.”

While Bing AI runs on ChatGPT technology, the bot wasn’t programmed to end conversations when a user asked about certain sensitive topics. As a result, Microsoft is reducing the number of chats a user can have with the bot, going from unlimited chats to only 60 chats per day.

“As we mentioned recently, very long chat sessions can confuse the underlying chat model in the new Bing,” Microsoft wrote in a blog post.

The Sydney personality that the chatbot used was deemphasized by Microsoft and the chatbot no longer responds to that name.

When a reporter from Bloomberg asked the AI if he could call the chatbot Sydney, Bing AI responded, “I’m sorry, but I have nothing to tell you about Sydney… This conversation is over. Goodbye.”

Microsoft hopes to phase out the personalized side of its AI and wants users to interact more with the search engine instead.

This is not the first time that Microsoft has had to rein in an AI chatbot gone rogue.

In 2016, the company created a Twitter account for Tay, a chatbot that was intended to simulate the speech patterns of a 19-year-old American girl who regularly uses social media. The bot started tweeting racist and insensitive remarks to its followers after some Twitter users taught it those remarks. Microsoft shut the bot down after a day on Twitter.

Microsoft says that users will be able to choose the tone in which Bing AI responds to them in the future.

“The goal is to give you more control on the type of chat behavior to best meet your needs,” Microsoft wrote in a blog post.